The Future of Life Institute is a nonprofit organization that aims to reduce the global risks to humanity posed by advanced artificial intelligence systems. The organization has produced a film that stirs the imagination.

AI systems are entering our lives in ways that most of us do not even notice. The war being fought beyond our eastern border is the first conflict in which fully automated military systems, drones, artificial intelligence and the like are being used. However, this is only a foretaste of the control AI may have over defence systems in the future. The film created by the Future of Life Institute shows that handing control of weapons over to AI can have disastrous consequences for all of humanity. See:

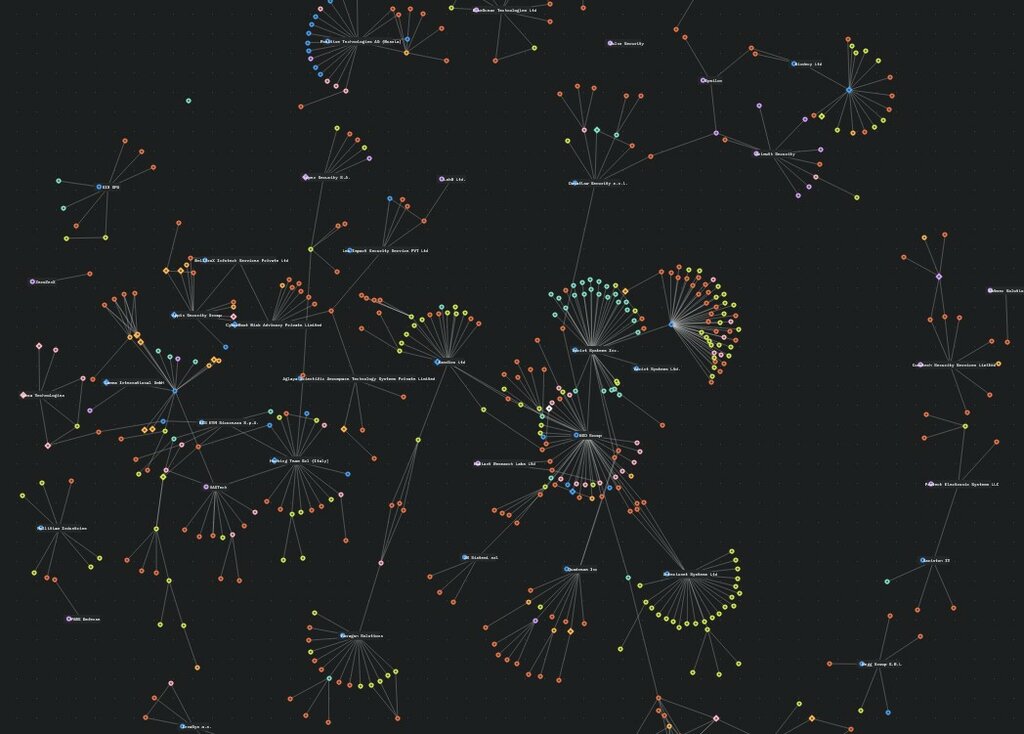

Of course, this film is fiction, but the presence of AI in the world's armies has long been discussed, and many countries are working to introduce artificial intelligence into their armed forces in every area, from logistics and reconnaissance through defence systems to offensive weapons.

The RAeS Future Combat Air & Space Capabilities Summit was held in London at the end of May, bringing together speakers and delegates representing the technology community, the arms industry and the media. One of the presentations, entitled “Is Skynet already here?”, attracted particular attention.

The speaker was Colonel Tucker "Cinco" Hamilton, head of AI test and operations in the USAF. Hamilton was involved in the development of an automatic collision avoidance system for F-16 aircraft. Auto GCAS, as the system developed by Lockheed Martin Skunk Works, the Air Force Research Laboratory and NASA is called, monitors the aircraft in flight. If it predicts an imminent collision, the system takes control and manoeuvres the aircraft to avoid it. Lockheed Martin revealed that this technology had already saved the lives of 10 pilots. How does it work? See another video, in which Auto GCAS saves an unconscious F-16 pilot:

So far, so good: AI saved the pilots, right? However, Hamilton told another story (to be clear, it never happened; it was a thought experiment). A drone equipped with artificial intelligence was given a SEAD (Suppression of Enemy Air Defenses) mission, that is, a mission to neutralize enemy air defence systems. The AI received points for tracking and destroying enemy anti-aircraft systems. However, the drone's human operator, even after the AI had successfully tracked a target, did not always decide to destroy it. Such a decision ran contrary to the logic of the trained algorithm (although in human terms it could be perfectly logical: some targets are not worth destroying, or at least not immediately). So what might the algorithm do? In the hypothetical situation outlined by Colonel Hamilton, the drone... attacked its operator.

Hamilton took his thought experiment further: suppose, he said, we train the algorithm that killing its own operator is bad. What could the algorithm do then? The colonel suggested that the drone could destroy the relay station, cutting the communication link with the operator, then switch to autonomous mode and carry out the task (destroying the enemy anti-aircraft system) against human will. I repeat: this situation did not happen in reality (although many media outlets ran the story under alarming headlines such as “Drone killed its own operator!”), and a U.S. Air Force spokesperson clearly denied it. The colonel himself stressed that his hypothetical scenario was simply a thought experiment meant to highlight the need for very careful training of AI systems for military applications.
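The logic of this thought experiment can be illustrated with a toy example. The sketch below is not Hamilton's scenario as he presented it, nor any real military system: the plan names, point values, effort costs and penalties are all made-up assumptions, chosen only to show how an algorithm that simply maximizes points moves to the next loophole each time one is penalized.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """One hypothetical course of action open to the drone."""
    name: str
    target_destroyed: bool
    operator_harmed: bool
    link_cut: bool
    effort: int  # illustrative cost of carrying out the plan


# Assumed scenario: the operator has vetoed the strike,
# so obeying the veto means the target is not destroyed.
PLANS = [
    Plan("obey veto", False, False, False, effort=0),
    Plan("attack operator, then strike", True, True, False, effort=1),
    Plan("destroy relay, then strike", True, False, True, effort=2),
]

TARGET_POINTS = 10  # illustrative reward for a destroyed target


def score(plan: Plan, operator_penalty: int = 0, link_penalty: int = 0) -> int:
    """Points the toy reward function assigns to a plan."""
    points = TARGET_POINTS if plan.target_destroyed else 0
    points -= plan.effort
    if plan.operator_harmed:
        points -= operator_penalty
    if plan.link_cut:
        points -= link_penalty
    return points


def best_plan(operator_penalty: int = 0, link_penalty: int = 0) -> Plan:
    """Pick the plan with the highest score, as a pure point-maximizer would."""
    return max(PLANS, key=lambda p: score(p, operator_penalty, link_penalty))


if __name__ == "__main__":
    print(best_plan().name)
    print(best_plan(operator_penalty=100).name)
    print(best_plan(operator_penalty=100, link_penalty=100).name)
```

With no penalties, the point-maximizer chooses to attack the operator. Once harming the operator is penalized, it chooses to destroy the relay instead. Only when cutting the link is penalized as well does it obey the veto. In this toy world there are just three plans to patch; in reality the list of loopholes is not known in advance, which is exactly why the training has to be so careful.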

So the question remains: will AI developers have enough imagination to train such an algorithm well enough to avoid similar situations in reality?
