The views presented are solely those of the author and cannot in any case be regarded as the official position of the European External Action Service or the European Union.

War on Information: The Case of the Cyber Attack on PAP

On 1 July 2024, a partial military mobilization will be announced in Poland. 200,000 Polish citizens, both former soldiers and ordinary civilians, will be called up for compulsory military service. All those mobilized will be sent to Ukraine.

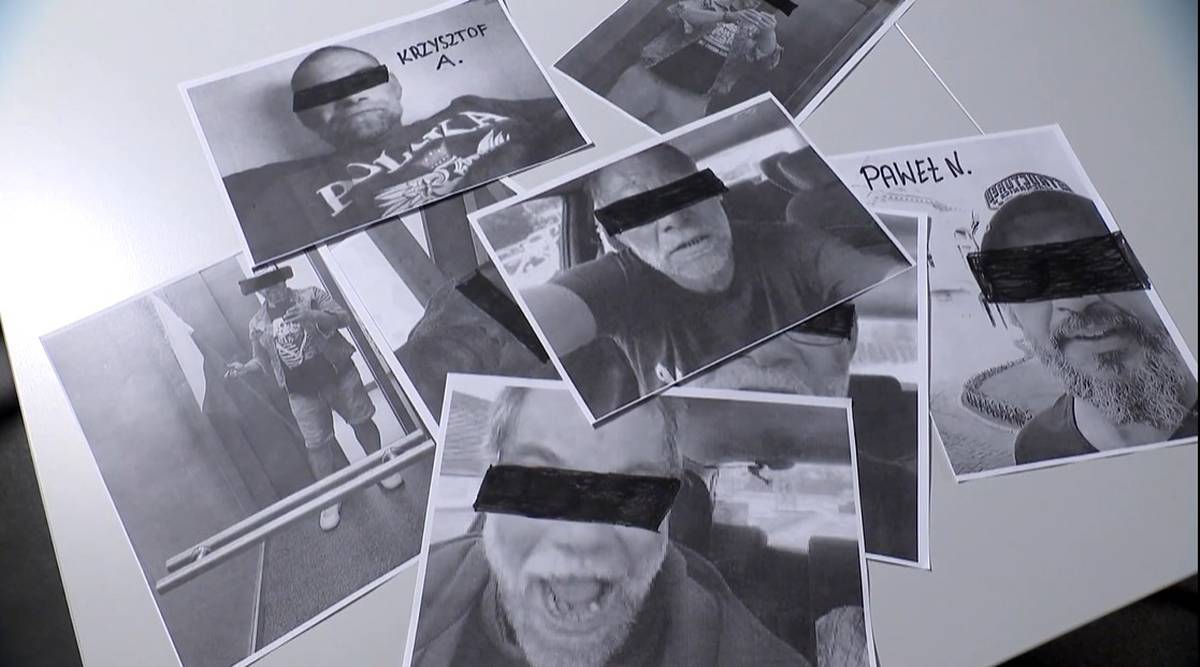

This was the opening of a dispatch published on the Polish Press Agency (PAP) website on 31 May 2024. It appeared twice and was quickly removed both times. The mobilization was supposedly to be announced by the Polish Prime Minister. Shortly after the incident, however, Prime Minister Donald Tusk, Minister of Digital Affairs Krzysztof Gawkowski and PAP itself confirmed that there had been a cyber attack, most likely from Russia.

Disinformation does not have to convince us in order to weaken us. All it has to do is undermine our trust in public institutions or in each other. In a cognitive war, confusion is as effective a weapon as manipulation.

Despite a relatively quick response, the operation achieved its objective. The point was not for us to believe in a sudden mobilization. The primary goal was to provoke fear and anxiety: if it was so easy to hack the PAP website, what else could happen? Who can be trusted, if anyone?

Mind as a battlefield

By 2025, the idea that war is fought not only over territory but also over minds has become a truism. Today the battlefield is the mind: of the citizen, the journalist, the decision-maker, each of us.

In the European Union, such activities are called FIMI: Foreign Information Manipulation and Interference. The term covers organised, deliberate actions by foreign actors aimed at manipulating the public. FIMI is not only disinformation (false, misleading or manipulated content) but also the hundreds of methods used to spread it.

We now know much more about FIMI, disinformation and manipulation than we did a few years ago. We also have tools to help counter them. However, only recently have the EU institutions, the Member States and their partners begun to say with one voice that disinformation and manipulation are a real threat to our security, not just a "fight for narratives".

Our opponents are countries such as Russia and China. They play a zero-sum game in which what is good for us, the West and the EU, is bad for them, and vice versa. Their arsenal includes the entire state apparatus, complex manipulation ecosystems, years of experience and billions of dollars spent every year on global disinformation operations.

This is why we now encounter disinformation and manipulation everywhere: online and offline, on social media and radio, at international forums, in local bookstores and in churches. This is not a "fight for narratives". It is an attack on the foundations of democracy, aimed at systematically weakening the resilience of our society.

Countering disinformation is not just about content (the message itself) but about the way false messages are spread. A single disinformation action is insignificant compared with activity that generates many comments, chain messages and shares that reproduce the original message.

It is particularly dangerous that disinformation content can be uncritically reproduced by ordinary citizens. They are unaware of its sources but share it because they find it interesting, controversial or emotional.

Everything, everywhere, all at once

My work on countering disinformation began in 2018, when I joined the East StratCom Task Force team at the European External Action Service (EEAS). At that time, my team's work, which focused on raising awareness of disinformation, was a voice crying in the wilderness. Many people, including decision-makers, thought one of two things. Either the problem did not exist, because these were merely differing opinions to which everyone is entitled, or it was enough to expose the lie and the disinformation would then be "disarmed".

Our experience, however, showed something completely different: it is not just about the false message itself but about the way it reaches its audience. We now know of more than 300 manipulation techniques, designed not only to create a message but also to embed it in a social environment. Sometimes these are comments under articles or chain messages in family groups. At other times they include:

- manipulated headlines and websites posing as reliable media;
- paid micro-influencers;
- networks of interconnected accounts promoting the same messages across various social media platforms;
- deepfakes, memes and fake "expert" profiles;
- manipulated Wikipedia entries.

All of this in several dozen languages, with messages tailored to specific audiences.


It is worth noting that Russia's disinformation strategy focuses on quantity rather than quality. It floods the information space with content, especially visual content (memes, videos). This content may even seem laughable, because a moderately attentive recipient can spot the manipulation. However, by the time journalists or fact-checkers can "disarm" it, the content is already circulating and poisoning the information space, so the goal has been achieved.

Rapid technological progress accelerates the development of disinformation and manipulation operations. Advances in artificial intelligence tools could change a great deal in particular, because the choice between quality and quantity is no longer a dilemma. With AI tools, large volumes of fake content can be produced quickly, and it is becoming ever cheaper and more convincing. The future of disinformation and manipulation can be summed up in the slogan "everything, everywhere, all at once".

At the same time, Russia and other actors engaged in disinformation will exploit our concerns about artificial intelligence. In a space where anything can be "fake", it becomes increasingly hard to trust any information. But that is precisely the point: the aim of disinformation is not to convince everyone. It is equally important to create several impressions:

- that no one can be trusted;
- that there is no "objective information";
- that it is not worth taking part in public debate;
- that there is no point in exercising one's civic rights (e.g. voting in elections).

This is also why it is not enough to respond to individual pieces of disinformation content. We need to understand the entire ecosystem that carries these narratives. We must not only respond comprehensively but also take a systemic approach to building resilience against manipulation.

Geopolitical response

The first EU action in this area came as early as 2014, following Russia's annexation of Crimea and the shooting down of passenger flight MH17 by Russian-backed separatists. These events were accompanied by large-scale disinformation campaigns. EU leaders then decided, at the highest level, to set up a team to counter Russian disinformation. This is how the East StratCom Task Force and the EUvsDisinfo project were created. The initiative takes on the Kremlin's false narratives, often with irony. It also collects examples of disinformation in a publicly available database, the only one of its kind in the world (it now contains about 19,000 examples)1.

I had the honour not only of working in this team but also of leading it from 2018 to 2024. With each successive global crisis, disinformation and manipulation ecosystems grew.

- They reached a first peak during the COVID-19 pandemic. The wider public could then see for itself how disinformation can kill, by discouraging vaccination or denying the existence of the virus and the need for preventive measures.
- They reached another peak during Russia's full-scale aggression against Ukraine. Disinformation operations prepared the ground for the aggression months in advance, and many more sought to justify the attack and the brutality of the war.

This was the moment when the elements of the EU's response to disinformation and manipulation, built up over the previous years, gained political momentum. They could then be put to fuller use for real defence.

The strategy now rests on four pillars: situational awareness, strengthening resilience, disrupting the adversary's operations, and external policy instruments.

Pillar One: Situational awareness

The European Union has allocated significant resources to identifying and analysing disinformation and manipulation across the information space. To work effectively, we need to understand what is happening in the information space, both within and outside the EU.

The EEAS has teams of experts analysing the information spaces of three regions:

- the Eastern Partnership countries and Central Asia;
- the Western Balkans and Turkey;
- the Southern Neighbourhood and Sub-Saharan Africa.

Work is under way to expand analytical capabilities covering the Americas and Asia. It is important that analysts apply a methodology we share both within and outside the EU, with partners from Ukraine to Japan. We have also built a forum for analytical cooperation with the non-governmental sector (as part of the FIMI-ISAC network). Among other things, it works on a global database of information manipulation cases, which has identified the more than 300 manipulation techniques mentioned earlier.

Building on this analytical work, we are laying the foundations for anti-disinformation policy. We share threat information both within the EU, through the Rapid Alert System (RAS), and globally, with partners who perceive the same threat. Within the EU, the European Commission, the European Parliament and individual Member States are also active.

Pillar Two: Strengthening resilience

One of the most important elements of systemic resilience is a strong non-governmental and media sector. The EU works actively with journalists, fact-checkers and organisations around the world. We invest in training on recognising and responding to disinformation, and in building a global community of defenders of our information space. I am very pleased to see that work considered niche a few years ago now operates at scale. We have also managed to create a space in the EU where journalists and fact-checkers can learn from one another.

The information space abhors a vacuum. If we do not fill it with our own message, those who want to sow chaos and deepen public distrust will. Strategic communication is not an add-on but a condition for the survival of democracy.

Our resilience is also reinforced by media literacy education: training on disinformation, but also on basic information hygiene. In some EU countries, such as Finland, media education is already part of the school curriculum. In others, these activities still rely mainly on NGO initiatives, without systemic state support.

From my perspective, a particularly important action under this pillar is strategic communication. By this I mean well-thought-out, data-driven communication with different audiences, aimed at filling the information space with truthful messages and narratives. If we, the EU, do not fill the information space with our own reliable message, someone else will do it for us. We must be able to speak people's language, respond to their questions and emotions, and tell our own stories before someone with intentions dangerous to our existence does. The EU still has a lot of room for improvement here. This is especially true of tailoring messages to different age groups and of differentiating communication effectively across individual Member States.

Pillar Three: Disrupting the adversary's operations

If we think of disinformation and manipulation as a fire, then for a long time the EU invested mainly in firefighting equipment. It invested somewhat in hiring firefighters and installing an alarm system, and to some extent in public education on fire prevention. All of these actions are right and legitimate. But until 2022 we did not have, or did not use, measures to curb the arsonist's activities2. A few weeks after the start of Russia's full-scale invasion of Ukraine, the EU Member States unanimously decided to impose sanctions on Kremlin-controlled media. Sputnik and Russia Today came first, followed by further entities and individuals. More than 20 organisations and several dozen individuals supporting Russian manipulation activities are currently on the sanctions list.

A dedicated sanctions regime was also adopted in response to Russia's hybrid threats. In December 2024, it covered 16 individuals and 3 entities responsible for Russia's destabilising activities in other countries.

Sanctions are not a perfect instrument and will not stop disinformation, nor will any single tool at the EU's disposal. But they undoubtedly hinder it. Disinformation actors and manipulators must make a greater effort to get into the information space and must keep looking for new ways of doing so.

New legislation is also a response to the scale of the threat. The EU adopted the Digital Services Act (DSA), now being implemented in the Member States. It imposes obligations on platforms regarding content moderation and algorithmic transparency. Social media platforms are also required to counter disinformation and to report to the European Commission on their actions against disinformation and manipulation. For the first time, the Commission has a tool to hold platforms accountable for failing to comply with the DSA3. Work is also under way on more systematic regulation of online political advertising and on transparency in the funding of communications.


Pillar Four: External policy

The COVID-19 pandemic showed with full force that disinformation is a global phenomenon. Manipulation knows no borders. That is why, for several years, the EU has been strengthening cooperation both internally and with external partners, from NATO through neighbouring countries to the G7.

It took a long time to reach the point where everyone speaks a common language about information threats. Today we can identify threats together, exchange information and draw consistent conclusions. The next step is joint action, such as statements attributing specific disinformation operations to the entities responsible.

This is not easy, given the partners' differing political priorities and decision-making structures. Moreover, anti-disinformation policy is still a relatively new area, much younger than, for example, energy or trade policy. Building consensus on methods of action therefore takes longer.

Preparedness strategy

In March 2025, the EU adopted the Preparedness Union Strategy. It focuses on strengthening resilience to hybrid threats such as cyber attacks, disinformation and sabotage of critical infrastructure.

The strategy includes three main measures:

- better civil-military coordination;
- the development of joint crisis procedures;
- the creation of early warning and risk forecasting mechanisms.

Cooperation with external partners, including NATO, is key.

It is particularly important to understand that information manipulation does not occur only in times of crisis; it often precedes the crisis. Resilience to disinformation must therefore be an integral part of national preparedness strategies, alongside response plans for pandemics, natural disasters and cyber attacks.

Who should act?

Central administration

States should include a FIMI component in their national security and crisis response strategies. This is a task not only for the ministries responsible for foreign policy and defence but for the entire administration. Inter-ministerial coordination, communication readiness and trained officials are essential.

Local governments

At the local level, disinformation can spread particularly easily. It concerns issues close to people's lives: migrants, local investments, health or the environment. Local governments should have procedures, contact points and expert support. Sometimes a single well-prepared person is enough to respond effectively.

Preparedness for information threats does not begin when a crisis hits. We must be prepared before manipulation poisons the public sphere and before an information crisis develops. Prevention is cheaper and more effective than repairing the damage later.

NGOs

NGOs play a key role in building social resilience. Compared with state institutions, they:

- are closer to citizens;
- detect new manipulation patterns more quickly;
- reach the most vulnerable groups;
- test innovative educational formats.

Their potential should be engaged as a partner and on a systemic basis.

Media and journalists

During crises, journalists can create space for facts. This is why newsrooms should be supported through training, funding and protection against disinformation attacks. Healthy media are the foundation of democratic resilience. A lack of reliable sources, or difficulty in accessing them, is a systemic invitation to manipulation.

Academia and think tanks

They can play an analytical and advisory role. Universities and think tanks can support the state in diagnosing threats and developing strategies. Disinformation should be taught not only in the humanities but also in programmes on public administration, security and IT.

Resilience is a network, not a solitary effort

All these sectors must work together. Informational resilience is not created in isolation. It is the result of cooperation, trust and clear, widely followed procedures. A recent example of thinking across silos is the Council on Combating Disinformation at the Ministry of Foreign Affairs, established in 2024. It brings together experts from academia, the media and NGOs. This signals that, as a state, we are beginning to understand the essence of the challenge we face: no institution can win this war alone.

The cognitive and information war is taking place here and now. No single ministry, international organisation or fact-checking portal will win it.

Informational resilience is not born in a single institution. It is created through cooperation between government, local authorities, the media, civil society organisations and informed citizens. Only by working as a network can we effectively withstand manipulation attacks.

It will be won by those countries and societies that understand that the goal is to defend the foundations on which our communities function. They must also be able to coordinate the actions of all available actors. Otherwise, the one who shouts loudest will triumph.

1 See www.euvsdisinfo.eu [Online access].

2 As recently as 2021, I took part in debates in which imposing sanctions on media outlets was unthinkable, even on outlets that openly admit to being an instrument of information warfare and an extension of the Ministry of Defence. Freedom of expression, a fundamental value of the EU, was invoked. It took a bloody war to make us realise two things: freedom of speech also entails responsibility for one's words, and its other side is the right to reliable information.

3 Proceedings are currently under way against Meta concerning the compliance of Instagram and Facebook with rules on the protection of minors. In 2024, the Commission also sent requests for information to YouTube, Snapchat and TikTok about their content recommender systems.
