The EU Digital Services Act (DSA) is a regulation of the European Parliament and of the Council that aims to substantially improve the digital space within the European Union.

The act responds to the development of online platforms and social media by introducing uniform rules on the liability of online intermediaries for content published by users. Its main premise is that what is illegal offline must also be combated online.

The provisions were implemented in stages. Following earlier key decisions, the regulation entered into force on 16 November 2022. The largest online platforms, which reach more than 45 million monthly users in the Union, had to comply with the new requirements as early as August 2023. The DSA rules became fully applicable in all Member States, including to smaller entities, on 17 February 2024. Since then, each country has been obliged to appoint a national Digital Services Coordinator to ensure that the new standards of safety and transparency are respected.

Although the DSA is an EU regulation and is directly applicable, it requires individual countries to adopt national rules governing the competences of supervisory authorities and the procedure for imposing penalties. In Poland, however, this process met a serious legislative obstacle in the form of a decision by the head of state. The President of Poland decided to veto the amendment to the Act on the provision of services by electronic means, which was intended to adapt the Polish legal order to the requirements of the EU regulation.

The main reason for the President's opposition was not the idea of protecting users online, but concern for freedom of speech and for the way the supervisory authority was to be established and to operate. In the President's opinion, the bill proposed by the government gave too much administrative power over content appearing online, which in certain circumstances could lead to preventive or arbitrary censorship or to the blocking of user accounts.

The President argued that the mechanisms for removing illegal content must be precise and guarantee an effective right of appeal to independent courts, and that the Polish law, in the form presented, did not provide adequate safeguards for the constitutional right to freedom of expression.

Summary of key obligations

The Digital Services Act (DSA) imposes a number of new obligations on the largest market players, such as Facebook and X, in order to increase oversight of how content is moderated online.

- Transparency and combating illegal content

The biggest change is the introduction of a "notice and action" mechanism. Platforms must provide users with easy-to-use tools for reporting illegal content. Once the service receives such a notification, it is required to respond promptly and decide whether to remove or keep the material, and each such decision must be justified. A user whose post was removed has gained the right to a clear explanation of why this happened and the possibility to appeal the decision.

- Limits on profiling and protection of minors

The DSA places great emphasis on privacy and the protection of the youngest users. Services are completely prohibited from displaying targeted (behavioral) advertising to children and young people. Furthermore, for all users, it is prohibited to target advertisements based on profiling that uses so-called sensitive data, i.e. information on political views, religion, sexual orientation or health. Platforms also need to explain in an understandable way why a specific advertisement was shown to the user and who paid for it.

- Algorithms and ‘Dark Patterns’

The EU regulation targets unfair design practices, known as "dark patterns", which aim to manipulate users (e.g. making it difficult to delete an account or forcing consent to marketing). In addition, the tech giants must disclose the main parameters of their recommender systems, that is, explain why the algorithm shows us particular content. Users must also be able to choose at least one viewing option that is not based on profiling (e.g. a chronological feed of posts).

- Risk analysis and audits

The largest platforms are required to carry out annual systemic risk assessments. They must examine how their services could be used to spread disinformation, manipulate elections or harm mental health. The results of these assessments are verified by independent audits and are subject to scrutiny by the European Commission, so that digital giants cannot act with impunity.

→ compiled by the editors

14.01.2025

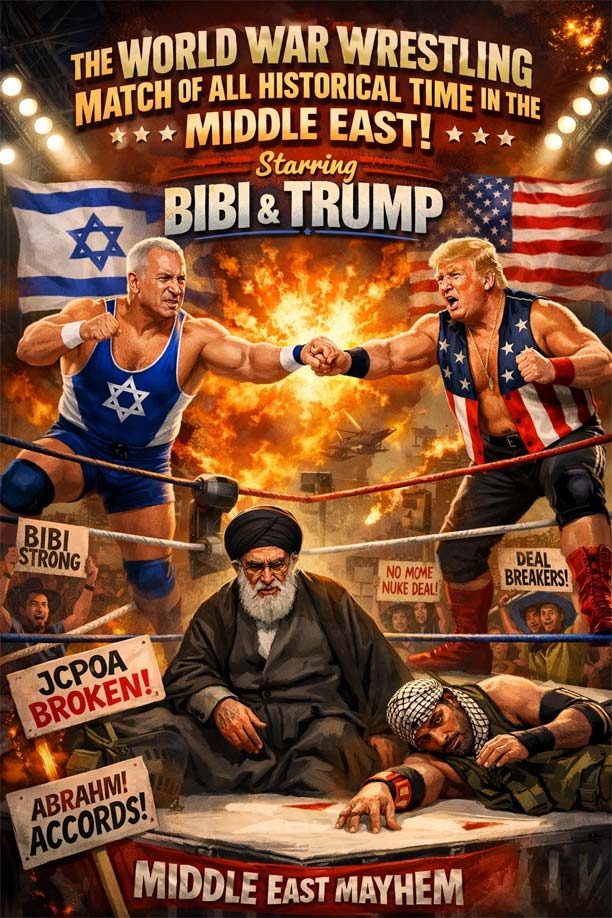

• graphics: Gemini / barma Tribunal newspaper

• source: consilium.europa.eu
